Neural Networks, Deep Learning and Automatic Speech Recognition: How Speech to Text Works

Technical

As you know, Voci works on automatic speech recognition (ASR). I’ve been with Voci almost since the beginning, nearly eight years, helping to develop our speech recognition engine. Over those years, I have often been asked what exactly the engine does: how does speech recognition work?

It is hard for me to come up with a great answer. Although ASR was perhaps the earliest major application of deep learning [1], there are (surprisingly) no good, open introductory courses on the topic. This is unlike fields such as computer vision, where beginners can take a course like Stanford’s CS231n and get a very good picture of current practice.

Not having these basic materials is a big problem. It makes it hard to explain or describe ASR in an accessible and accurate way. Even a long-time practitioner like me finds it difficult.

If you have no background in AI or deep learning at all, I can’t explain how ASR works without starting from first principles, going all the way back to the basics of probability and Bayes' rule. I would also need to explain why speech recognition needs both an acoustic model and a language model in order to function.
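For readers who want a small taste of that probability background: the textbook formulation of ASR uses Bayes' rule to pick the most likely word sequence $W$ given the audio features $X$. This is the standard "noisy channel" view, shown here as general background rather than as a description of Voci's engine:

$$
\hat{W} = \arg\max_{W} P(W \mid X) = \arg\max_{W} \frac{P(X \mid W)\,P(W)}{P(X)} = \arg\max_{W} P(X \mid W)\,P(W)
$$

Here $P(X \mid W)$ is the acoustic model (how likely this audio is, given the words), $P(W)$ is the language model (how likely the word sequence is on its own), and $P(X)$ drops out because it does not depend on $W$. That product is why a classic recognizer needs both models.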

If you already know deep neural networks, it’s not much easier. I would have to start by explaining recurrent neural networks (RNNs) and bidirectional long short-term memory (BLSTM) networks, or even an alternative (and shinier!) paradigm such as connectionist temporal classification (CTC).
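To give a flavor of CTC: a CTC-trained network emits one label per audio frame, including a special "blank" symbol, and that frame-level output becomes text by merging repeated labels and then removing blanks. Below is a minimal Python sketch of greedy (best-path) decoding. The `_` blank symbol and the helper names `ctc_collapse` and `greedy_decode` are hypothetical choices for illustration. Production decoders typically use beam search, often combined with a language model.

```python
from itertools import groupby

BLANK = "_"  # hypothetical blank symbol; many toolkits use label index 0 instead


def ctc_collapse(frame_labels, blank=BLANK):
    """Turn a per-frame label sequence into output text:
    merge consecutive repeats first, then drop blanks."""
    merged = [label for label, _ in groupby(frame_labels)]
    return "".join(label for label in merged if label != blank)


def greedy_decode(frame_probs, alphabet, blank=BLANK):
    """Greedy (best-path) CTC decoding: take the most probable symbol
    in each frame, then collapse the resulting path."""
    path = [alphabet[max(range(len(p)), key=p.__getitem__)] for p in frame_probs]
    return ctc_collapse(path, blank)


if __name__ == "__main__":
    # The blank between the two "l" runs keeps the double letter in "hello".
    print(ctc_collapse(list("hh_ee_ll_ll_oo")))  # hello
    # Toy per-frame probabilities over the alphabet ["_", "a", "b"].
    probs = [[0.1, 0.8, 0.1], [0.2, 0.7, 0.1], [0.9, 0.05, 0.05], [0.1, 0.1, 0.8]]
    print(greedy_decode(probs, ["_", "a", "b"]))  # ab
```

The order of operations matters: without a blank between them, repeated frames of "l" merge into a single "l", so a double letter survives only when a blank separates its two runs.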

It’s all too much — and too hard — as an answer to the original question: how does the speech recognition engine work?

So, we decided to simplify it.

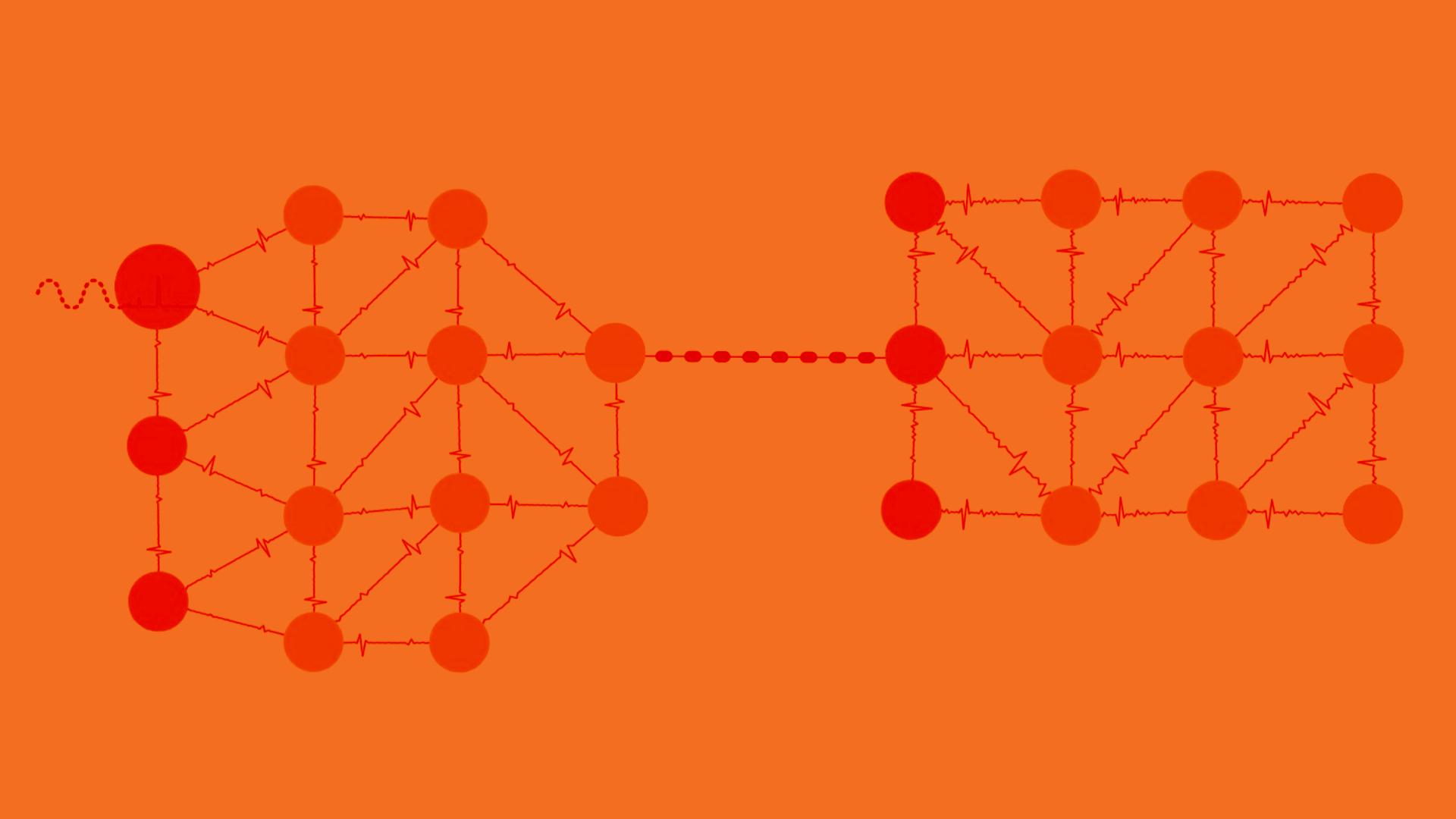

We produced this three-minute video explaining what conversational artificial intelligence (AI) and ASR are. It gives you a good feel for how modern ASR works: how the audio is broken down into smaller units, and how models with memory (such as RNNs) can model speech better. It is not meant to be formal, so you’ll probably learn more from it!
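The "memory" in an RNN is its hidden state: at each step, the network combines the new input with its previous state. Below is a deliberately tiny, scalar Python sketch of the standard Elman-style update $h_t = \tanh(w_x x_t + w_h h_{t-1} + b)$. The function name `rnn_states` and the weight values are hypothetical, chosen only to show that information from an early input persists after the input goes silent. Real acoustic models use learned weight matrices over vectors of audio features.

```python
import math


def rnn_states(inputs, w_x=0.5, w_h=0.9, b=0.0):
    """Run a scalar Elman-style RNN over `inputs` and return every hidden state.

    Hypothetical toy weights: real acoustic models use learned weight
    matrices over vectors of audio features, not scalars.
    """
    h = 0.0  # initial hidden state: no memory yet
    states = []
    for x in inputs:
        # The new state mixes the current input with the previous state (the "memory").
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


if __name__ == "__main__":
    # One "loud" frame followed by silence: the state decays but does not vanish.
    print(rnn_states([1.0, 0.0, 0.0, 0.0]))
    # Without recurrence (w_h=0), the state drops to zero as soon as the input does.
    print(rnn_states([1.0, 0.0, 0.0, 0.0], w_h=0.0))
```

With the recurrent weight set to zero, the model only ever sees the current frame. That is the "no memory" baseline that recurrent models improve on.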

[1]: If you haven't already, read "Deep Neural Networks for Acoustic Modeling in Speech Recognition" by Hinton et al. (IEEE Signal Processing Magazine, 2012).
